Motivation and Method

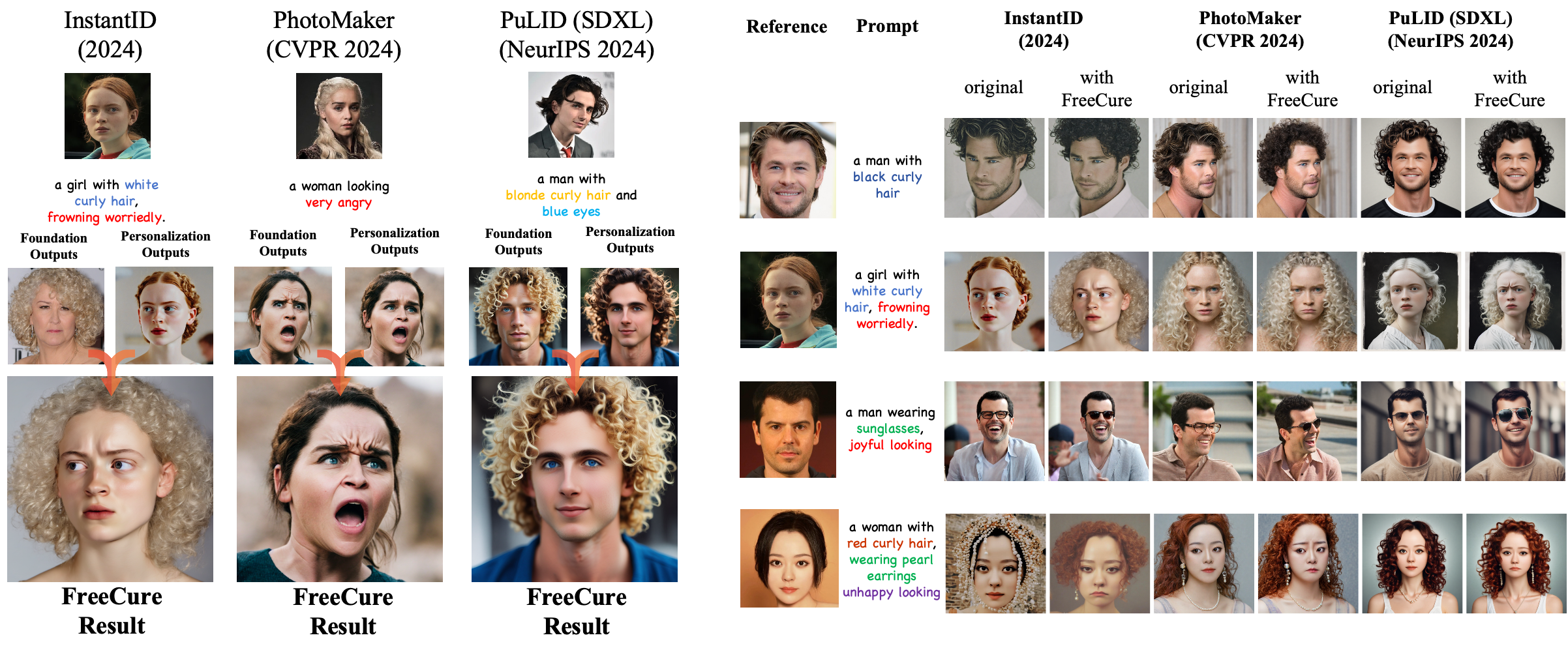

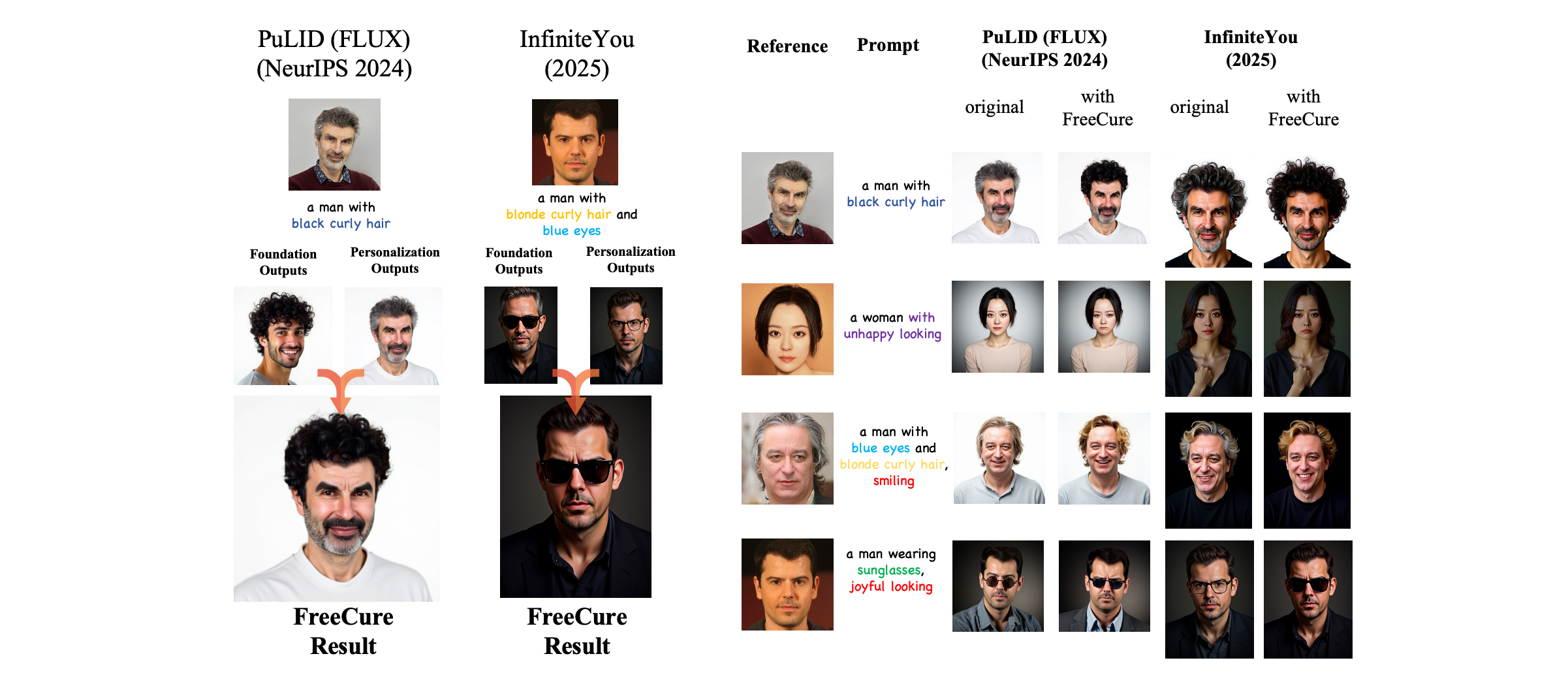

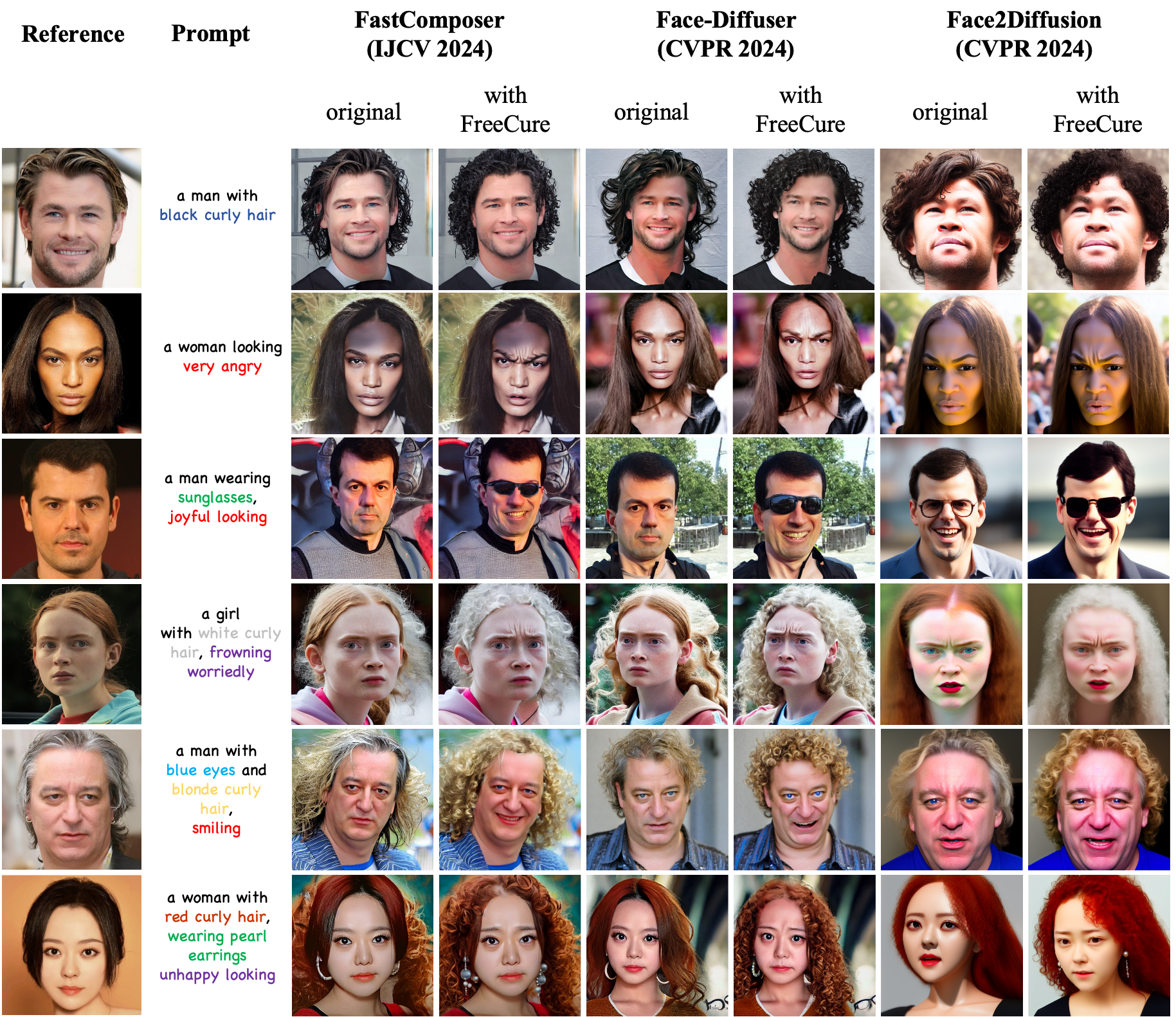

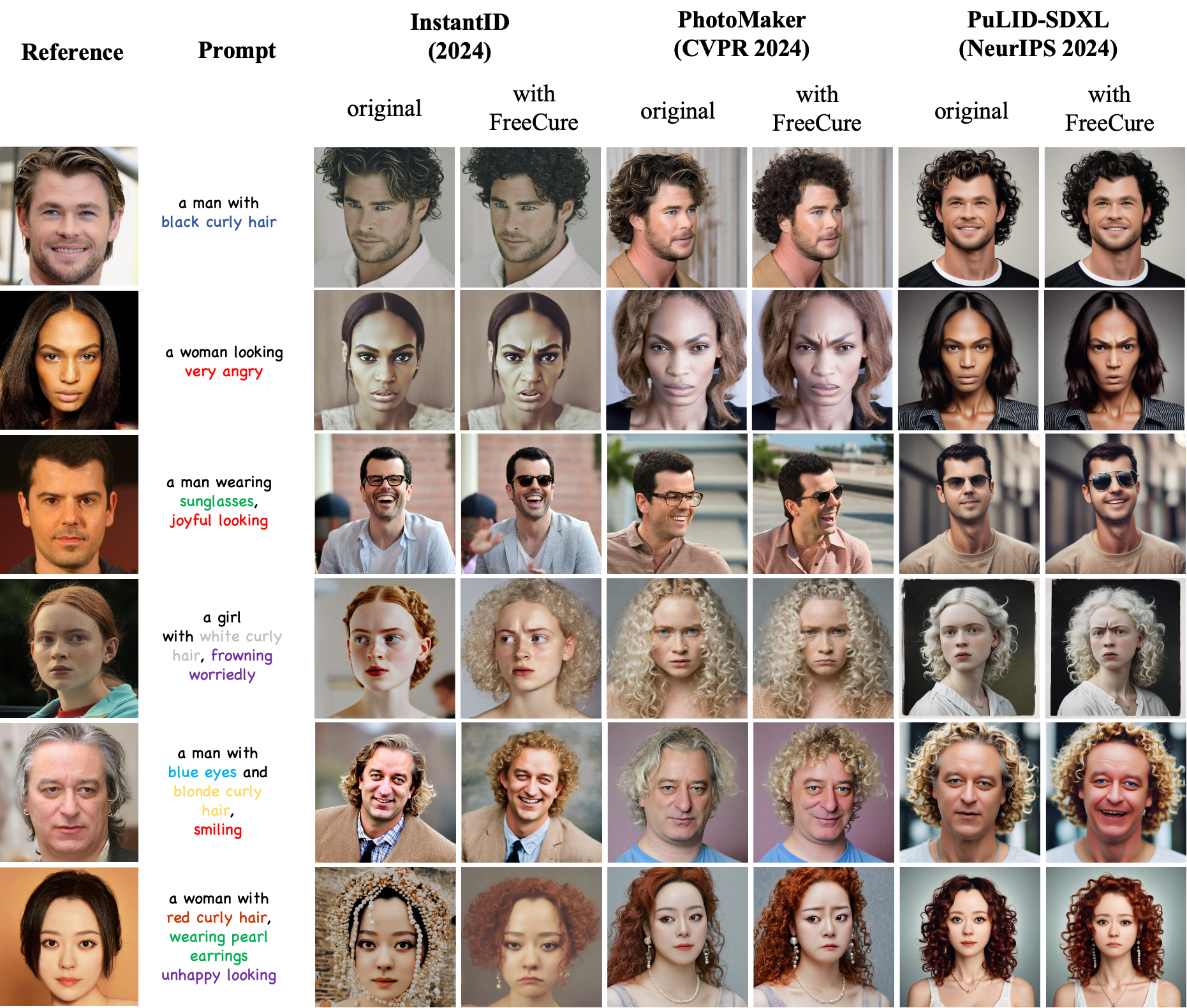

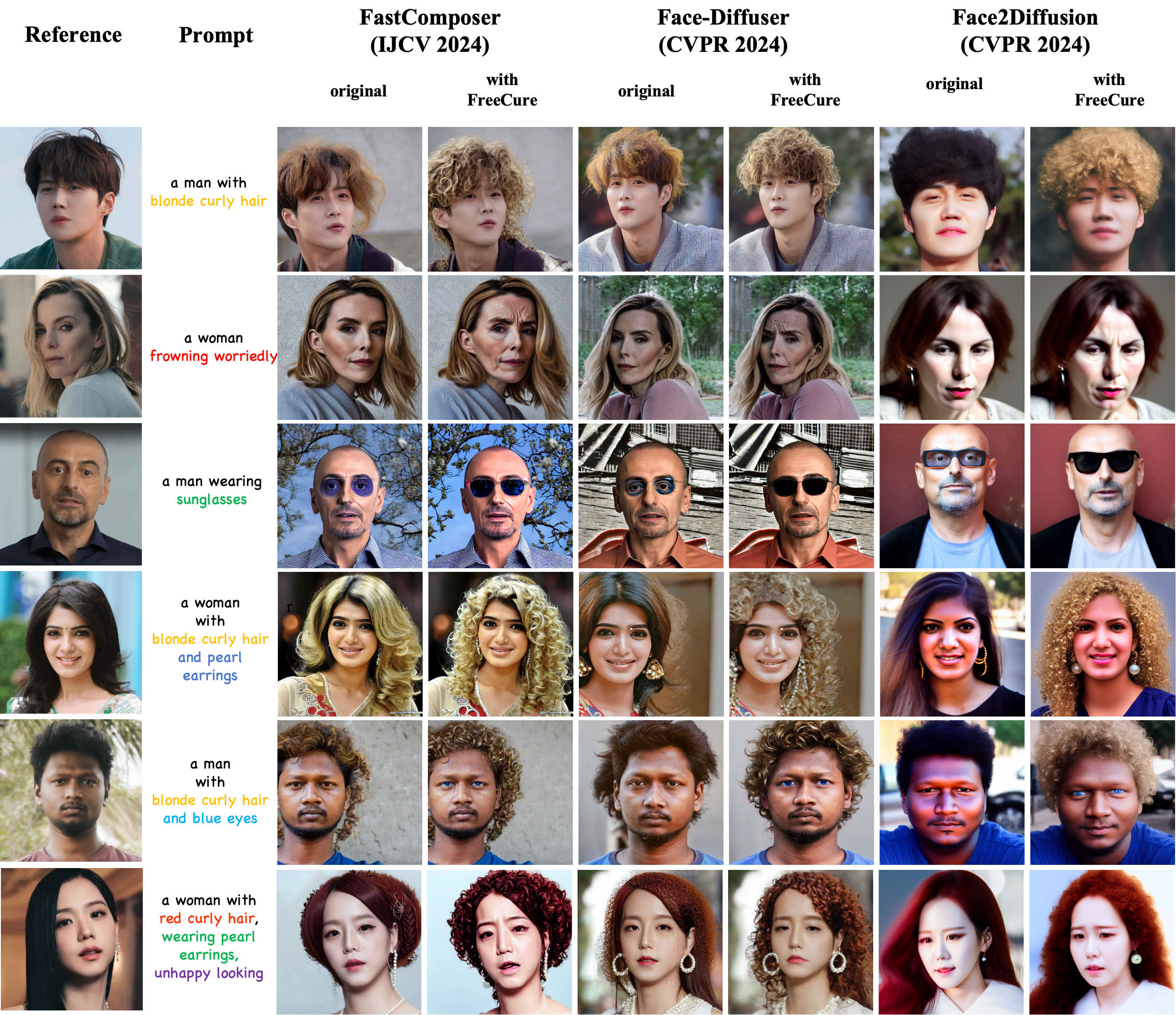

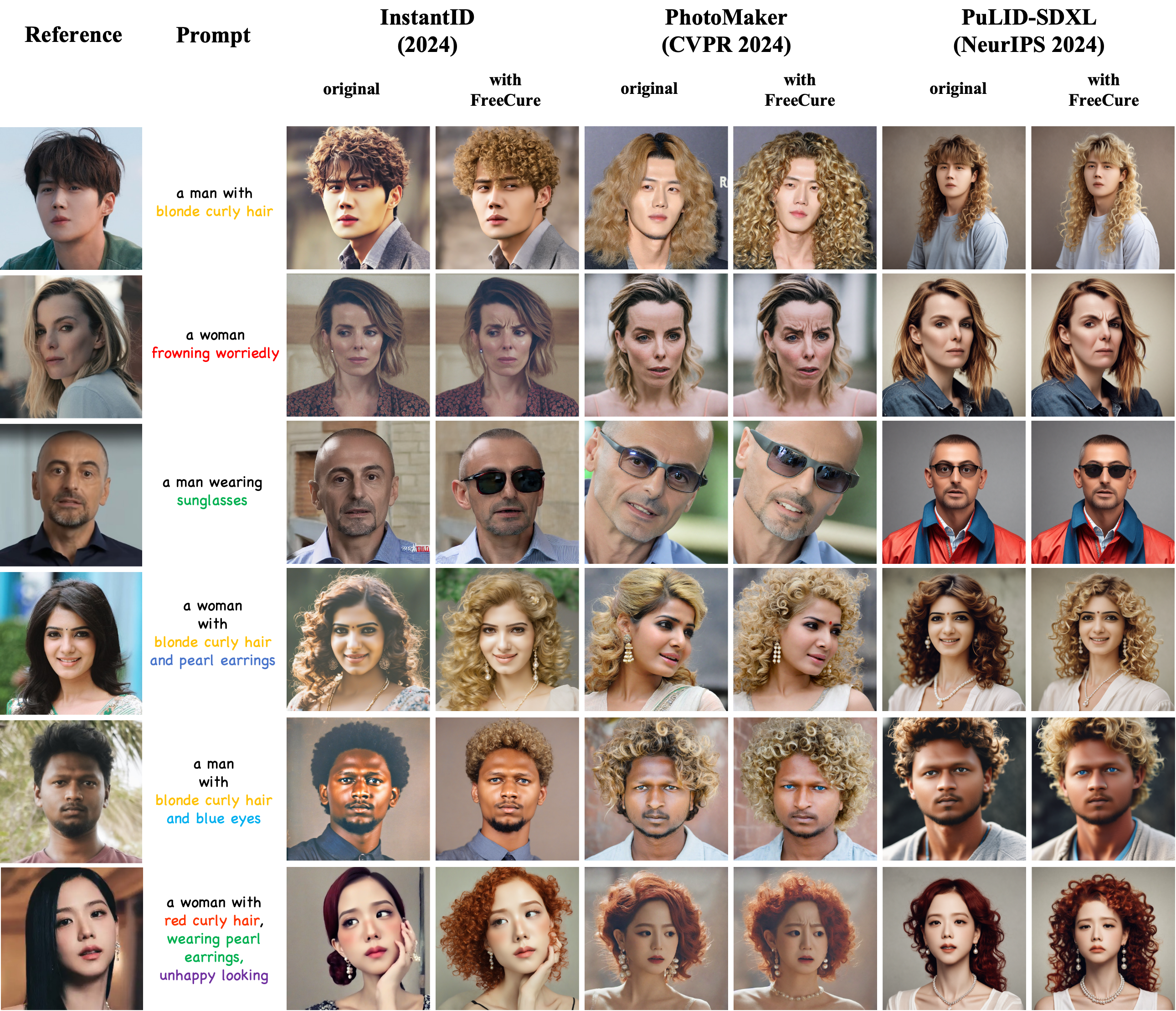

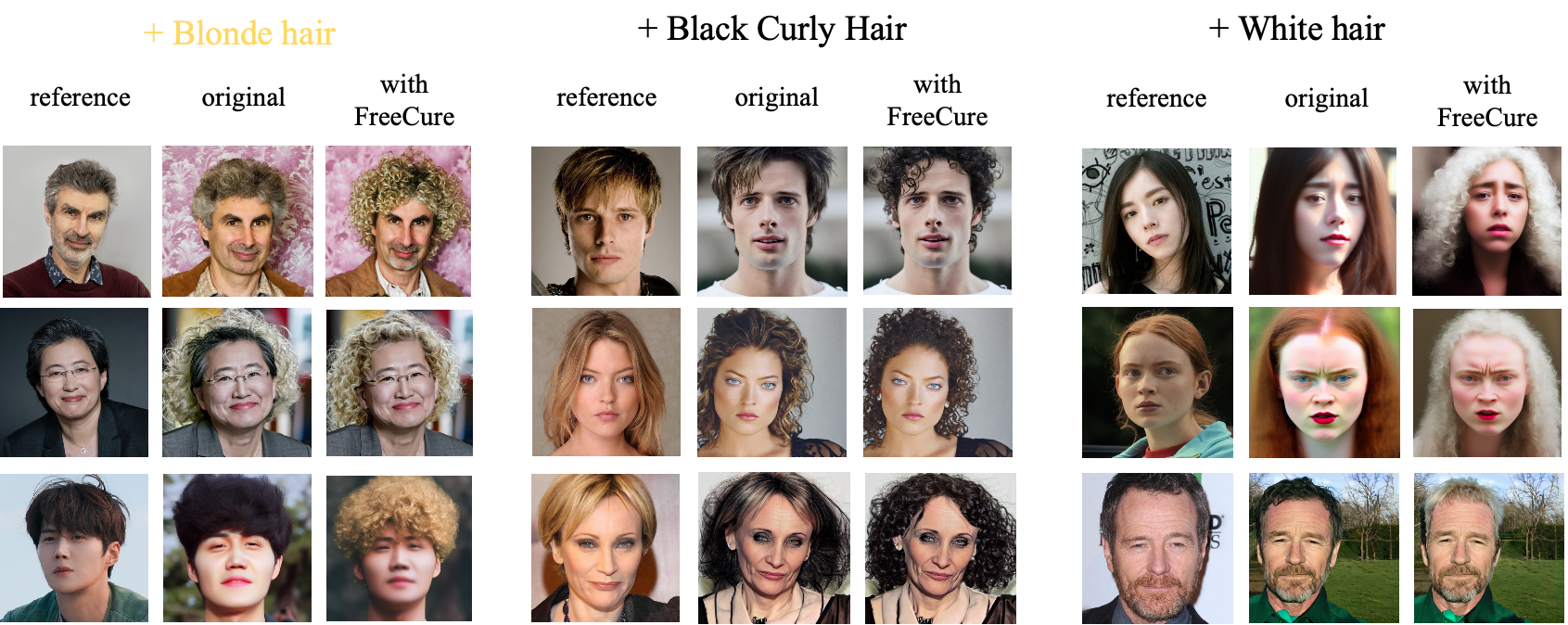

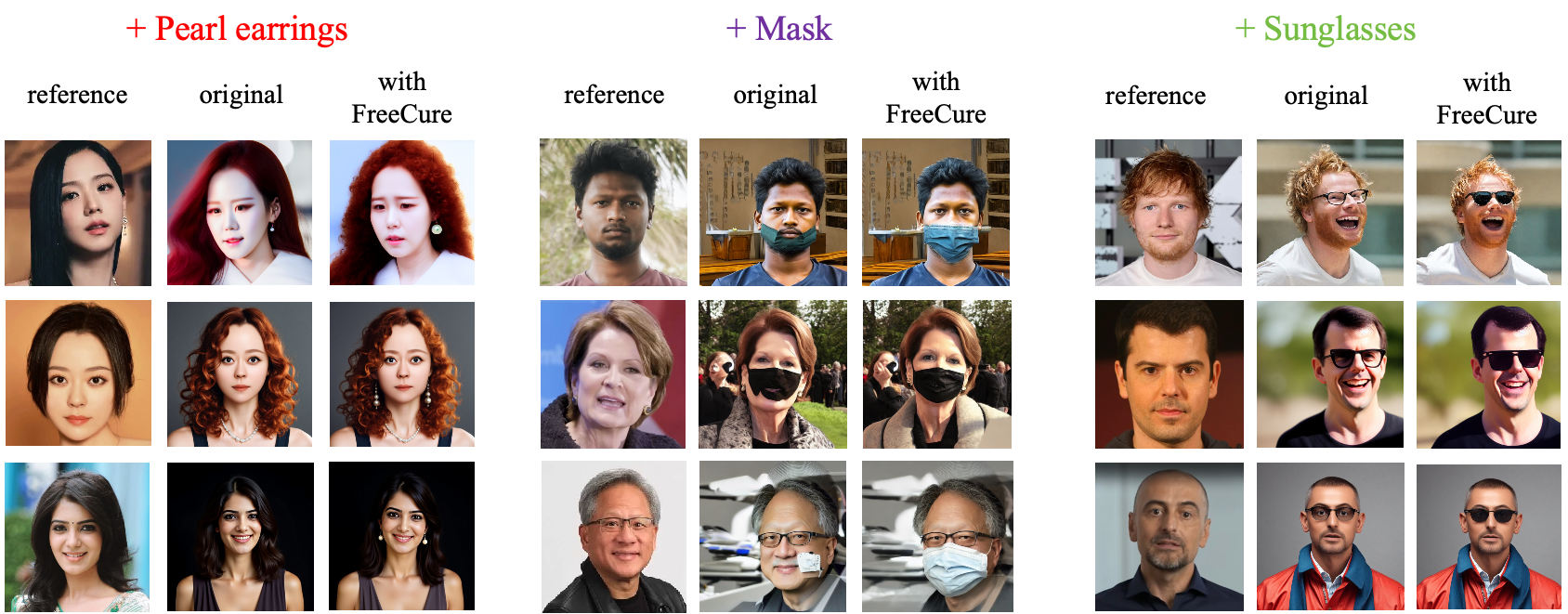

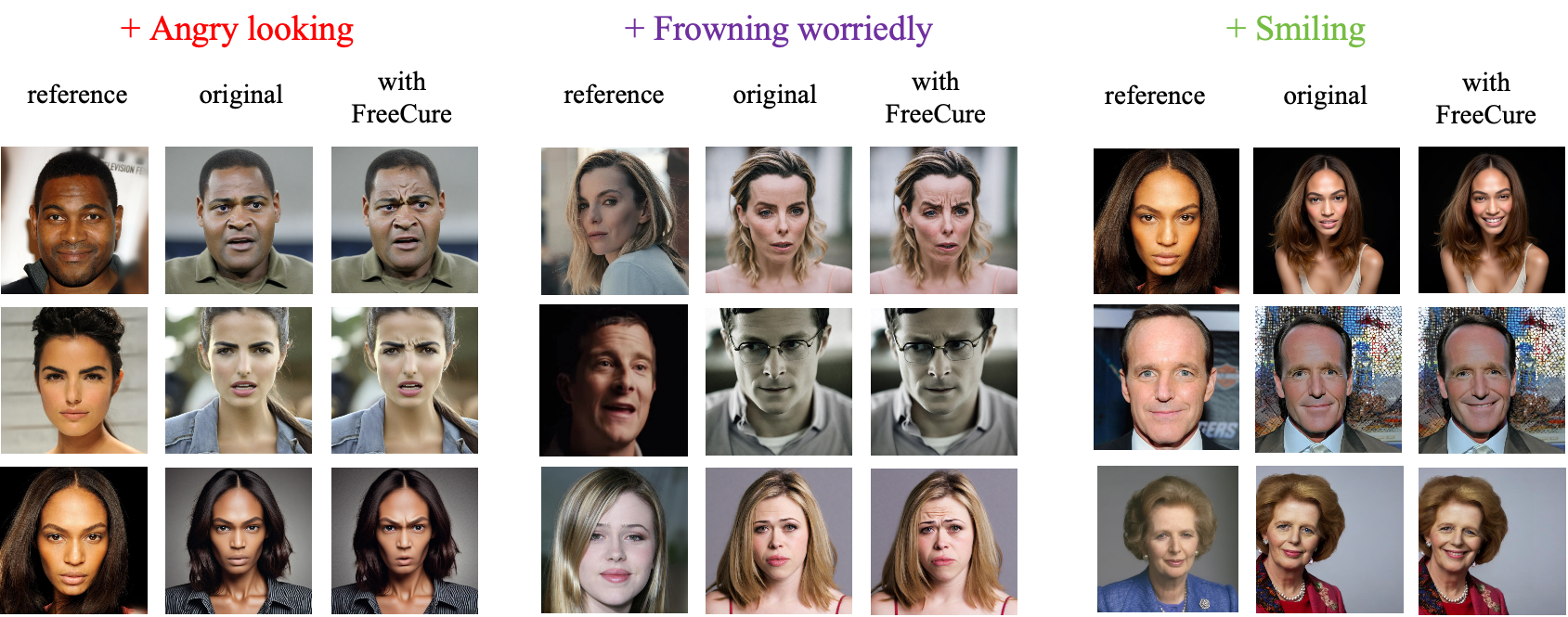

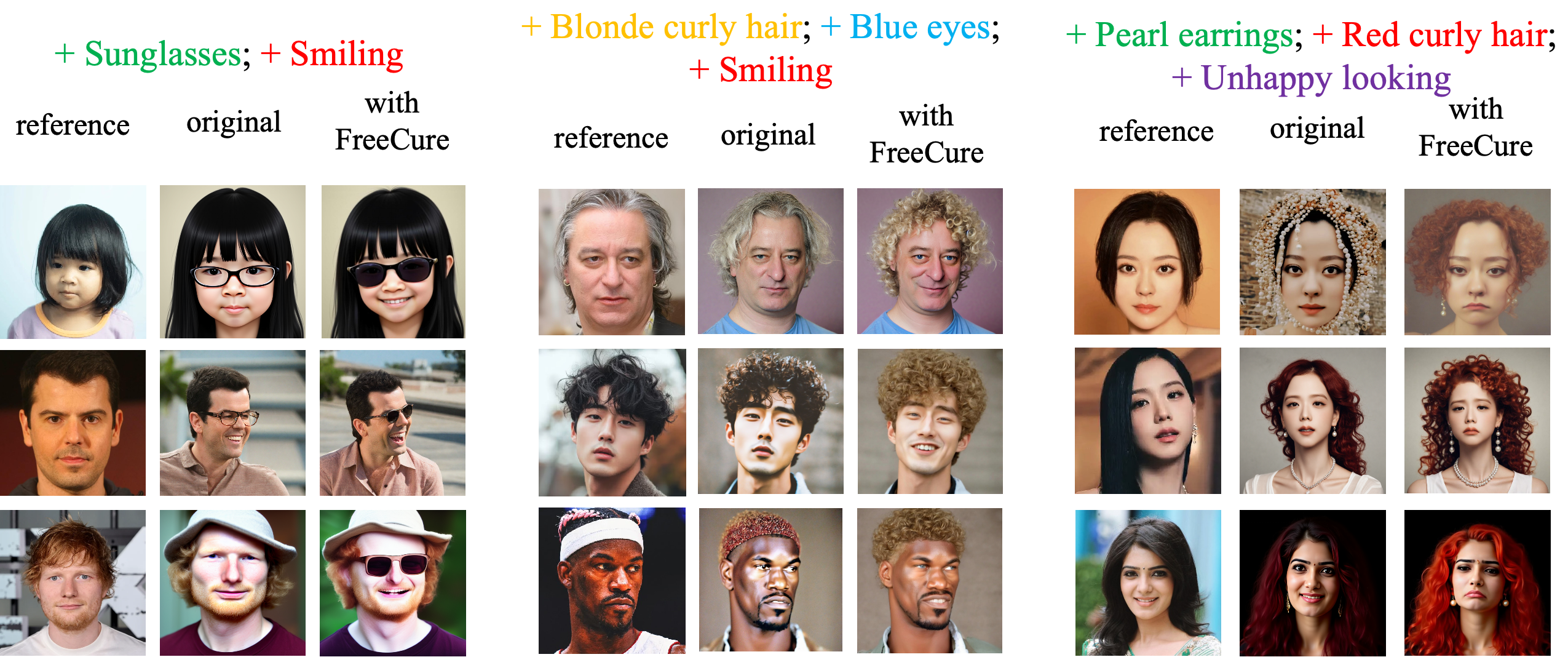

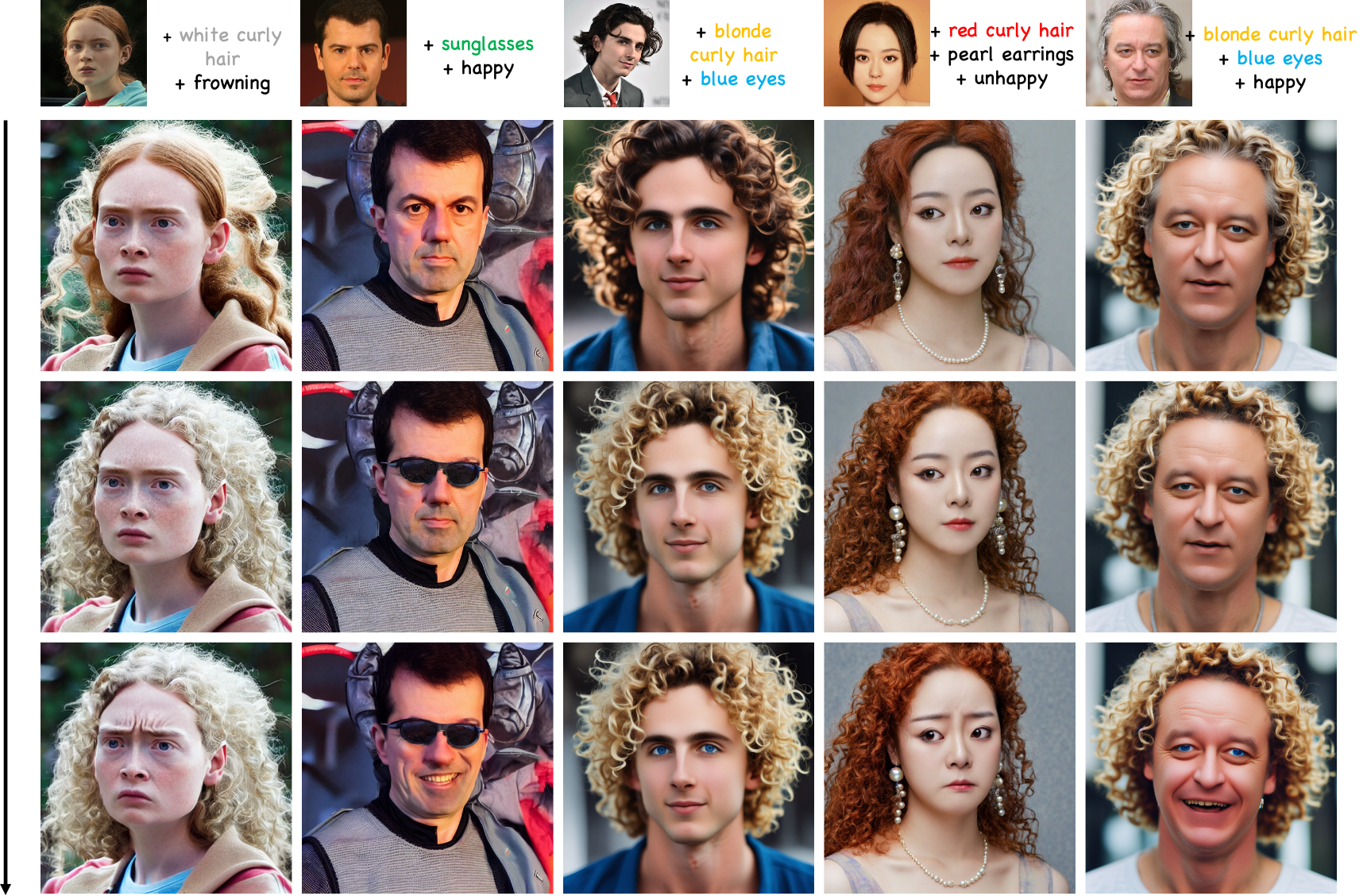

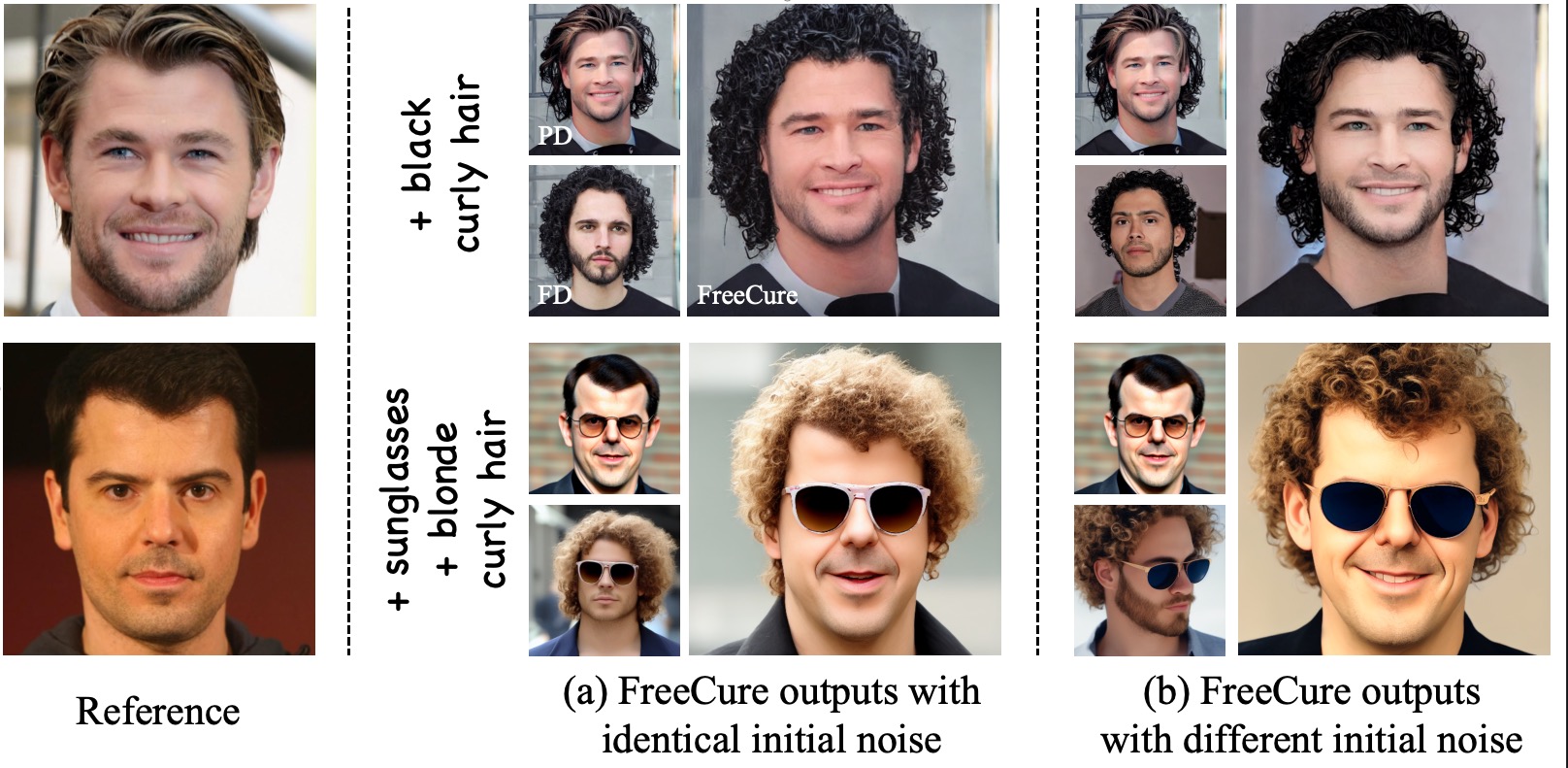

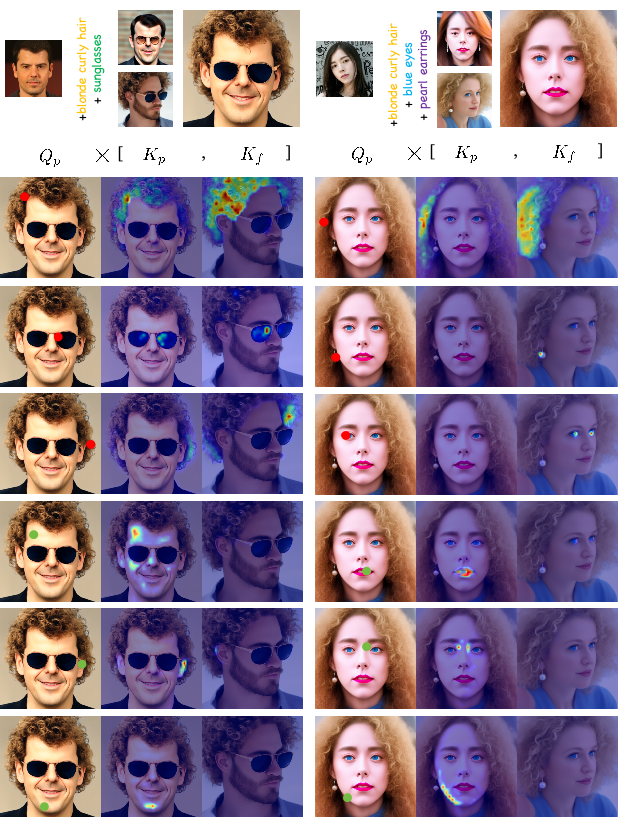

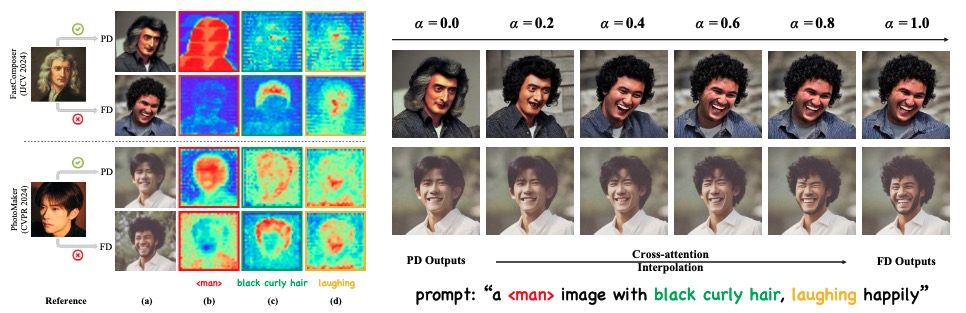

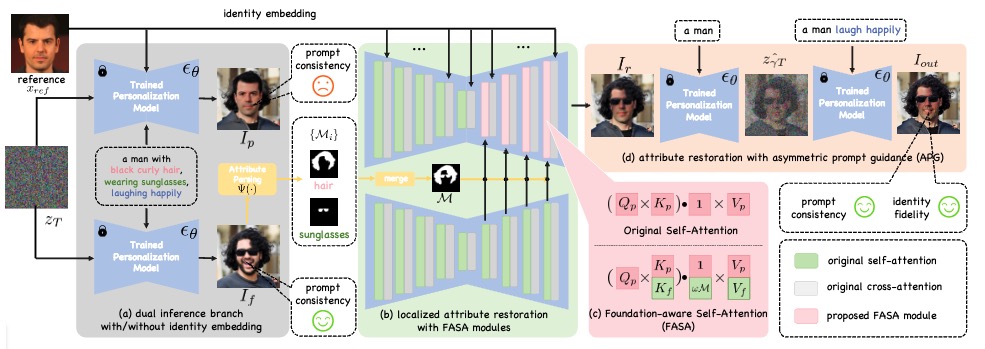

We analyze why current methods struggle to achieve precise control over facial attributes. The key issue lies in how personalization models process identity data: their cross-attention layers attend too strongly to identity-related tokens while suppressing the tokens that correspond to facial attributes (e.g., hairstyle and expression; see the left part). Consequently, these attributes become difficult to manipulate. However, these cross-attention layers (or adapters) are critical for preserving identity in the generated output, so they cannot simply be modified: adjusting the cross-attention maps frequently causes the loss of essential identity characteristics (see the right part).

While keeping the cross-attention modules intact, we propose a novel foundation-aware self-attention (FASA) mechanism, which allows attributes with high prompt consistency to replace poorly aligned ones during personalized generation. To leave the identity unharmed, this strategy also uses semantic segmentation models to generate scaling masks for these attributes, so that the replacement happens in a highly localized and harmonious manner. Furthermore, we use a simple but effective approach called asymmetric prompt guidance (APG) to restore abstract attributes such as expression.
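The mask-guided replacement described above can be illustrated with a small NumPy sketch. This is not the authors' FASA implementation: it assumes one plausible reading, namely that two branches run in parallel (an identity-personalized branch and a prompt-consistent foundation branch), that image features are flattened into `N` spatial tokens, and that a segmentation-derived scaling mask in `[0, 1]` selects where the foundation branch's self-attention output replaces the personalized one. The function names `self_attention` and `masked_attribute_replacement` and all argument names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(q, k, v):
    # Single-head scaled dot-product self-attention over N spatial tokens.
    # q, k, v: arrays of shape (N, d).
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)          # (N, N) token-to-token scores
    return softmax(scores, axis=-1) @ v    # (N, d) attended features


def masked_attribute_replacement(q_id, k_id, v_id,
                                 q_base, k_base, v_base,
                                 mask):
    """Hypothetical sketch of localized attribute replacement.

    *_id   : projections from the identity-personalized branch.
    *_base : projections from the (assumed) foundation branch, whose
             attributes follow the prompt more faithfully.
    mask   : shape (N,), scaling mask from a segmentation model resized
             to token resolution; 1 = attribute region (e.g. hair),
             0 = keep the personalized features untouched.
    """
    out_id = self_attention(q_id, k_id, v_id)
    out_base = self_attention(q_base, k_base, v_base)
    m = mask[:, None]                      # broadcast over feature dim
    # Inside the mask, take the foundation branch; outside, keep identity.
    return m * out_base + (1.0 - m) * out_id


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    N, d = 6, 4
    tensors = [rng.standard_normal((N, d)) for _ in range(6)]
    hair_mask = np.array([1.0, 1.0, 0.0, 0.0, 0.5, 0.0])
    out = masked_attribute_replacement(*tensors, hair_mask)
    print(out.shape)
```

Because the blend is a per-token convex combination, tokens with a mask value of 0 keep exactly the personalized output, which mirrors the goal of leaving identity regions unharmed; soft mask values give a gradual transition at attribute boundaries.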